Startup Stories

Lago Manifesto: Building billing shouldn't suck (for neither business nor eng)

Finn Lobsien • 5 min read

Dec 15, 2022 / 12 min read

TL;DR: We thought we had made something people wanted. Our first startup was actually a vitamin.

Preamble:

After writing about our pivot (part 1 and part 2), we had dozens of founders contacting us, asking for more details. A lot of them were operating in, or considering moving into, the “Reverse ETL” space. We thought writing an in-depth post-mortem would be a scalable way to share our learnings.

We joined YC in June 2021 (S21) pitching a “no-code data tool for growth teams, to segment and sync customer data” (see our application here). We had no product at the time; we shipped during the batch and started monetizing a few weeks later.

We ended up hard pivoting from this space six months later.

Six months ago, the team at Lago started building our new product: an open-source metering and usage-based billing API. This time, we have no intention of sunsetting it. Although it’s technically the same company and the same name (we like to say we’re “Lago v2” now), the learning curve was so steep and the range of emotions so intense that Lago v2 feels like our second startup.

So, what went wrong with our first endeavor? How is Lago v2 different?

We interviewed 130+ growth marketers, and quickly gathered a waiting list of 1000+ growth folks who said they wanted more “autonomy” to manage data.

When we showed them the product and its benefits, they LOVED it. “Exactly what I wanted” was a common reaction. That’s why we were surprised to see how hard it was for them to actually adopt the product. And here comes the key point we missed.

We thought their “blocker” was that they didn’t know SQL and couldn’t query their database or their data warehouse.

We were wrong.

Growth marketers wanted more autonomy to manage their data, but were not necessarily willing to put the extra effort into: (i) understanding the structure of a database (dozens of tables), and (ii) practicing lightweight data modeling.

Most of them were not that comfortable with spreadsheets, pivot tables, vlookups, files with thousands of rows and dozens of sheets.

That’s what killed our user adoption. We identified the problem, but our solution wasn’t answering it well enough.

Could it be answered by software? I still wonder.

To summarize, we listened (too) “literally” to interviewees and assumed that “SQL literacy” prevented growth teams from accessing the data they needed. The truth is that what holds them back is a mix of “data literacy” (data concepts, and how the data is collected and stored within each company) and “appetite to ramp up on data”.

That’s why most companies end up hiring “mid-tech” teams (business operations managers, analytics managers, who generally have an engineering varnish or background) to serve growth teams with data.

We had a technical growth team at Qonto (our previous company, a Fintech unicorn), meaning the growth team had its own engineering team, completely separate from the core engineering team. On top of that, the relationship with the core engineering team was smooth. This setup is very rare.

Indeed, most growth teams we connected with didn’t have their own engineering team (hence the value of the no-code tool we were building). In addition, we also discovered that the engineering team is generally very suspicious of growth folks approaching the data warehouse or the database.

We don’t really know if it’s a chicken-and-egg problem, but as a result, these growth teams had neither the data knowledge nor the permissions to read the database or create replicas, and that made adoption very difficult.

With Lago v1 (the no-code data tool for growth teams), we would often have excited growth teams willing to use our product, while the CTO dragged their feet on supporting the implementation: for lack of time or prioritization, or because they believed the growth team would mess up the data or create the wrong automations and segmentations for lack of data literacy. And we could really relate to that.

Once again, what I mean by “data literacy” is “what’s stored in which data table”, and “how these tables relate to each other”.

For instance, even with our no-code tool, if you wanted the “count of transactions” per user, you might have to identify 3 data tables within dozens and then join them (without using SQL, but still with a “vlookup” or “join” concept in mind).
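To make the “three tables and a join” point concrete, here is a minimal sketch in plain Python. The table names (`users`, `accounts`, `transactions`), their columns, and the sample rows are all hypothetical, invented for illustration; they are not Lago v1’s or Qonto’s actual schema. It only shows the kind of mental model a user needs even when no SQL is involved.

```python
from collections import Counter

# Hypothetical tables, invented for illustration only.
users = [
    {"user_id": 1, "email": "ana@example.com"},
    {"user_id": 2, "email": "bo@example.com"},
    {"user_id": 3, "email": "cy@example.com"},
]
accounts = [
    {"account_id": 10, "user_id": 1},
    {"account_id": 11, "user_id": 1},
    {"account_id": 12, "user_id": 2},
]
transactions = [
    {"transaction_id": 100, "account_id": 10},
    {"transaction_id": 101, "account_id": 11},
    {"transaction_id": 102, "account_id": 11},
    {"transaction_id": 103, "account_id": 12},
]


def transactions_per_user(users, accounts, transactions):
    """Count transactions per user by joining three tables."""
    # First "vlookup": which user owns each account?
    account_owner = {a["account_id"]: a["user_id"] for a in accounts}
    # Second "vlookup": resolve each transaction to a user, then count.
    counts = Counter(
        account_owner[t["account_id"]]
        for t in transactions
        if t["account_id"] in account_owner
    )
    # Left join on users, so users without transactions still appear with 0.
    return {u["email"]: counts.get(u["user_id"], 0) for u in users}


result = transactions_per_user(users, accounts, transactions)
print(result)
```

Even in this toy version, the user has to know that transactions hang off accounts rather than users, and that a user can own several accounts. That knowledge is the “data literacy” in question, and a no-code interface doesn’t remove the need for it.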

We’ve seen few growth teams capable of this, and when they were, they would already be SQL proficient, or go for a “data engineering tool” like Hightouch, Census, or dbt.

To summarize, we built Lago v1 as the product we would have loved to use at Qonto, but our team’s setup was too specific (engineers reporting to the VP Growth, great relationship between the VP Growth & VP Engineering). In other words, the market was too small.

We viewed the “modern data stack” as a pure marketing concept (more details here). We underestimated it, big time (yes, it sounds dumb, but everything will always look obvious in hindsight).

It’s definitely a well-marketed concept, but it also reflected a more than solid trend: using a data warehouse as the main repository of data within a company and hiring data engineers had already become mainstream.

In this context, the largest and fastest-growing market was data engineering teams. These teams had more precise use cases than growth teams, and unlike growth teams, they had direct access to the data warehouse and the database, as well as the CTO’s trust.

Even worse, we quickly realized that “early-stage companies” would rarely have the need for a sophisticated data tool, as their growth teams - when there was one - mainly focused on user acquisition; while later stage companies would hire a data engineering team that would always prefer a devtool.

We still managed to sell Lago v1, but we did so with the uncomfortable feeling that our clients would outgrow us quickly. We felt like an HR tool for founders who don’t have a Head of Talent yet: lightweight, easy, generalist. We knew that as soon as the “real leader” came in, our product would be one of the first to be replaced.

To summarize, we built for the wrong team, because we were passionate about bringing data to the growth teams, and because we overlooked the macro trends. As a result, we had a low product adoption rate, our “Annual Contract Value” was capped (early stage companies) and our market was too small (growth teams that are data-aware, but not too mature either).

It became clear at the end of 2021 that we should have picked another lane. We then evaluated many avenues.

Here is a shortlist:

We didn’t choose any of the options above. There are many reasons, but the bottom line is that our team wasn’t excited by any of them (more on this below).

After a lot of back and forth on a dozen ideas (it’s called “pivot hell” for a reason), only one really clicked, as in “why didn’t we build this in the first place?”.

That’s how “Lago v2” was born: Open Source Metering and Usage Based Billing.

At Qonto, we had built the home-grown billing system from scratch and scaled it to Series D. The three back-end engineers who led this on the tech side joined Lago’s founding team, and we re-explored the reasons it was such a painful topic in our previous company. As the former VP Growth (I owned Revenue), I remember being very frustrated and limited in the pricing iterations I wanted to implement, and in the data I wanted to extract from or send to our billing system. The billing system is really the golden goose in terms of data: it gathers usage data, how much the users paid vs. usage, and how this changed over time.

At Qonto, we kept hiring back-end engineers to maintain our home-grown billing system as we grew, and it became increasingly complex with time.

It was originally scoped as a three-month, one-person, one-time project. The reality is that a dozen people are working on it full-time today. We had tried to use a third-party platform many times, but each time we evaluated vendors, we thought their solution didn’t cover enough of our edge cases to justify the migration.

Knowing that, and having learnt from our first failed product, this time we made sure our challenges were not specific to Qonto, and went deep into “why companies continue to build a home-grown billing system, while billing is a nightmare for engineers”.

We also published a simple blog post about “billing nightmares”, which completely blew up on Hacker News and stayed #1 for 48 hours (777 points).

This time, we also put a lot of thought into what “solution” to this massive problem would work (again, learning from our first failed product), and this is how we forged a deep conviction about being open source (more on this here).

We often get the question “how and when do you know it’s the right idea?”.

I’m not sure there’s an absolute answer to this. Some companies had an initial product-market fit, and then lost it because they did not adapt fast enough. So, it depends.

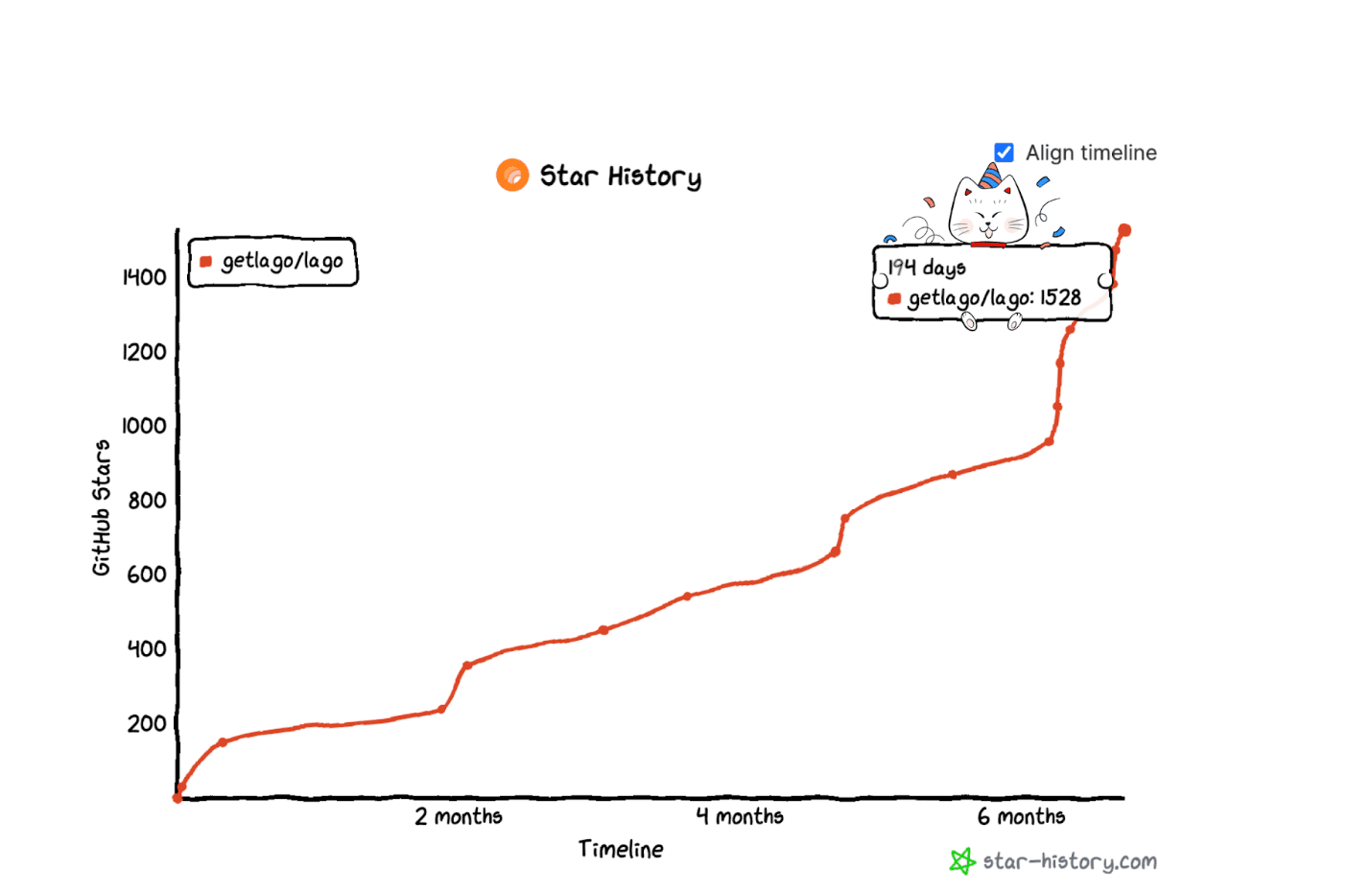

But in our very personal case, here is what happened. We opened our GitHub repository around 6 months ago. We haven’t relaunched officially yet, but Lago v2 already has traction we never had with Lago v1:

It’s only the beginning, but this really is different from our first attempt: the market pull is stronger, and the fit with our approach is incomparable.

It looks simple when put in bullet points, but it really was not. I want to emphasize this for any founders reading who are in the middle of a pivot.

Putting your first product in the trash and then rebuilding from zero, with the urgency to ship asap and no guarantee of success, is terrible for your mental health. It’s part of what we signed up for as founders, but it’s tough. If you have batchmates who found product-market fit straightaway, the comparison can be daunting. The pressure from investors can be strong too. You might also feel guilty towards your team, whether it’s rational or not. It’s messy.

I used to torture myself with a lot of “I should have known better”. Maybe. That’s why YC pushes you to launch asap, by the way. Maybe if I hadn’t learnt from these mistakes, Lago v2 wouldn’t have been born. We could have pivoted earlier (I should have known), or later (oh, I made a good decision). The truth is, nobody knows, so I finally let it go.

What I do know is that we would have regretted it had we given the money back, knowing we had sufficient runway to re-launch and the team stuck with us.

I don’t have a secret formula for overcoming this very messy period, but here is what made us push through, in case it can help:

If you have more questions about what we learned from building these products, both Raffi and I are happy to answer them.

Bonus: Quick notes about the reverse ETL space, in case you’re not familiar with it.

We did not even know what a reverse ETL was when we enrolled in the S21 batch, nor did we know our startup would be attached to this category.

Our one-liner was “a no-code data tool for growth teams, to segment and sync customer data”.

The basic idea is: “Companies have a lot of data scattered across a lot of different tools. They need to centralize it, to perform growth campaigns, based on users’ behavior”.

In plain English:

A reverse ETL focuses on step 4, as stated by Hightouch (the leading reverse ETL):

Reverse ETL is the process of copying data from your central data warehouse to your operational tools, including but not limited to SaaS tools used for growth, marketing, sales, and support.

Who performs Steps 1, 2, 3, and using which tools?

A data engineer usually does. They extract data from the business tools using an ETL (step 1), transform the data in a data warehouse (steps 2 and 3), and use a reverse ETL for step 4.
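As a rough illustration of that flow, here is a toy sketch in Python. Everything in it is an assumption made for the example: the source tools, the in-memory “warehouse” dictionary, the function names, and the `min_events` threshold are hypothetical and do not reflect how Lago v1, Hightouch, or any real ETL tool is implemented. The split into three functions is one reading of the steps described above.

```python
# Hypothetical rows from two business tools (illustrative data only).
crm_rows = [
    {"email": "Ana@Example.com ", "plan": "pro"},
    {"email": "bo@example.com", "plan": "free"},
]
product_events = [
    {"email": "ana@example.com", "event": "login"},
    {"email": "ana@example.com", "event": "export"},
    {"email": "bo@example.com", "event": "login"},
]


def extract_and_load(warehouse, crm_rows, product_events):
    """ETL: copy raw rows from the business tools into the warehouse."""
    warehouse["raw_crm"] = list(crm_rows)
    warehouse["raw_events"] = list(product_events)


def normalize(email):
    """Clean an email so rows from different tools can be matched."""
    return email.strip().lower()


def transform(warehouse):
    """Transform: clean the raw rows and model one row per customer."""
    events_per_email = {}
    for event in warehouse["raw_events"]:
        key = normalize(event["email"])
        events_per_email[key] = events_per_email.get(key, 0) + 1
    warehouse["customers"] = [
        {
            "email": normalize(row["email"]),
            "plan": row["plan"],
            "event_count": events_per_email.get(normalize(row["email"]), 0),
        }
        for row in warehouse["raw_crm"]
    ]


def reverse_etl(warehouse, destination, min_events):
    """Reverse ETL: copy a modeled segment from the warehouse to a tool."""
    for row in warehouse["customers"]:
        if row["event_count"] >= min_events:
            destination[row["email"]] = {"plan": row["plan"], "segment": "active"}


warehouse = {}
marketing_tool = {}  # stands in for an operational SaaS tool
extract_and_load(warehouse, crm_rows, product_events)
transform(warehouse)
reverse_etl(warehouse, marketing_tool, min_events=2)
print(marketing_tool)
```

Note that the last function is the only part that writes back to an operational tool. It depends entirely on the modeled `customers` table existing first, which is why the earlier steps usually sit with a data engineer.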

Our first product addressed the 4 steps, but the main use case of Lago was “step 4”. More on that later.

Having all the data in the same place, cleaned and usable by everyone in the organization, is a challenge anyone can relate to. Business users always need more data, and engineers hate data plumbing.

This universality makes it a huge market and a well-known problem. Building in a crowded market should never deter you. Indeed, after some research, we concluded that every market is relatively crowded, and that having several companies evangelizing the market can be a good thing… and we went all-in.